You R. Name

Affiliations. Address. Contacts. Motto. Etc.

555 your office number

123 your address street

Your City, State 12345

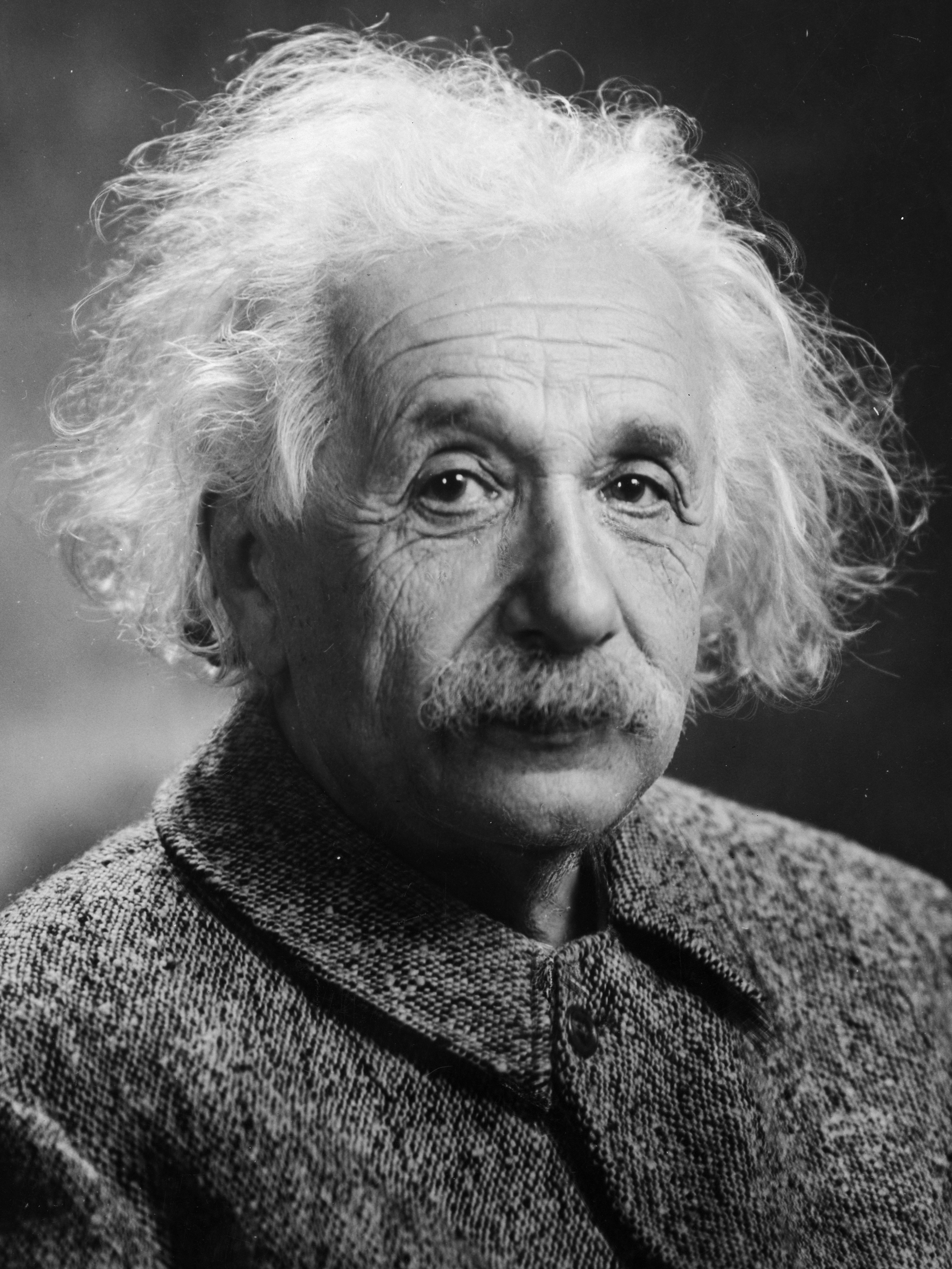

Write your biography here. Tell the world about yourself. Link to your favorite subreddit. You can put a picture in, too. The code is already in place: just name your picture prof_pic.jpg and put it in the img/ folder.

Put your address / P.O. box / other info right below your picture. You can also disable any of these elements by editing the profile property in the YAML header of your _pages/about.md. Edit _bibliography/papers.bib and Jekyll will render your publications page automatically.
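As a rough sketch, the YAML header of _pages/about.md might look something like the fragment below. The key names under profile (align, image, more_info) and the news and social toggles are assumptions based on common versions of this theme and may differ in your copy, so check the file that ships with it before editing.

```yaml
---
layout: about
title: about
permalink: /

# Assumed structure: removing this whole block is expected to hide
# the picture and the address shown next to the biography.
profile:
  # Assumed option: which side of the page the picture sits on.
  align: right
  # The picture file named above.
  image: prof_pic.jpg
  # Assumed key: free-form text rendered right below the picture.
  more_info: >
    <p>555 your office number</p>
    <p>123 your address street</p>
    <p>Your City, State 12345</p>

# Assumed toggles for the other elements on the page.
news: true
social: true
---
```

Under these assumptions, deleting more_info would drop only the address lines, while setting news or social to false would hide the corresponding section.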

Link to your social media connections, too. This theme is set up to use Font Awesome icons and Academicons, like the ones below. Add your Facebook, Twitter, LinkedIn, Google Scholar, or just disable all of them.

news

| Date | Announcement |
|---|---|
| Jan 15, 2016 | A simple inline announcement with Markdown emoji! |
| Nov 07, 2015 | A long announcement with details |
| Oct 22, 2015 | A simple inline announcement. |

latest posts

| Date | Post |
|---|---|
| Jan 27, 2024 | a post with code diff |
| Jan 27, 2024 | a post with advanced image components |
| Jan 27, 2024 | a post with vega lite |